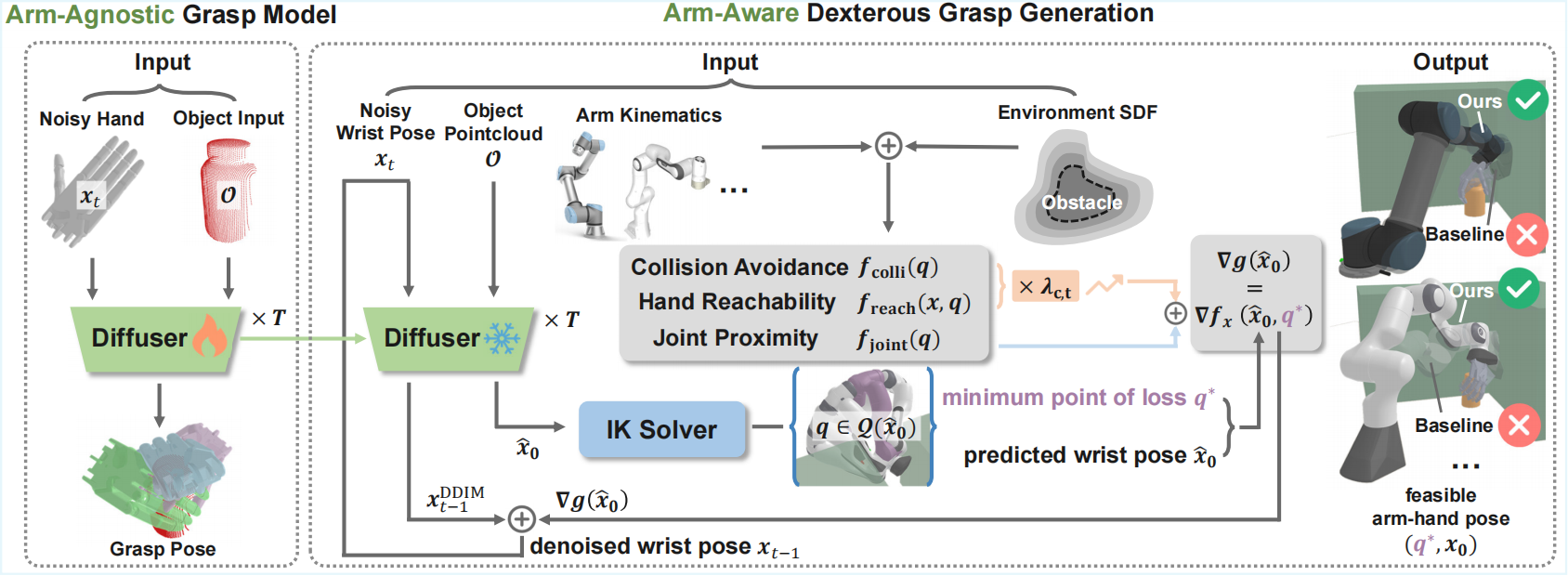

Method Overview

Overview of the proposed arm-aware dexterous grasp generation method. We first pretrain an arm-agnostic diffusion model that captures the distribution of wrist poses for a floating hand. During sampling, arm kinematics and the environment's signed distance field (SDF) are imposed as constraints, and their gradients guide the denoising process. This substantially increases the proportion of feasible grasps. The same pretrained model also adapts to different arm-hand configurations and constrained environments.
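The toy below is a minimal sketch of how a constraint gradient can steer sampling. It makes several assumptions:

- The pretrained diffusion model is replaced by the analytic score of a 2D Gaussian over a "wrist position".
- The environment is a single circular obstacle with a known SDF.
- Sampling uses unadjusted Langevin updates instead of a learned, noise-conditioned denoiser.

All names (`prior_score`, `collision_cost_grad`, `guided_sample`) and constants are hypothetical. Only the core idea comes from the description above: add a weighted constraint gradient to the model's score at every step.

```python
import math
import random

# Hypothetical stand-in for the pretrained arm-agnostic model: the exact score
# of an isotropic Gaussian prior over a 2D "wrist position".
PRIOR_MEAN = (0.0, 0.0)
PRIOR_STD = 1.0

# Hypothetical environment: a single circular obstacle described by its SDF.
OBSTACLE_CENTER = (0.0, 0.0)
OBSTACLE_RADIUS = 0.8
MARGIN = 0.1  # desired clearance from the obstacle surface (assumed)


def prior_score(x):
    """grad_x log N(x; PRIOR_MEAN, PRIOR_STD^2 I)."""
    return [-(xi - mi) / PRIOR_STD ** 2 for xi, mi in zip(x, PRIOR_MEAN)]


def sdf(x):
    """Signed distance to the obstacle surface (negative inside)."""
    return math.dist(x, OBSTACLE_CENTER) - OBSTACLE_RADIUS


def collision_cost_grad(x):
    """Gradient of the hinge cost c(x) = max(0, MARGIN - sdf(x))^2."""
    penetration = MARGIN - sdf(x)
    if penetration <= 0.0:
        return [0.0, 0.0]
    r = math.dist(x, OBSTACLE_CENTER)
    if r == 0.0:
        normal = [1.0, 0.0]  # SDF gradient is undefined at the center; pick a direction
    else:
        normal = [(xi - ci) / r for xi, ci in zip(x, OBSTACLE_CENTER)]
    # d/dx (MARGIN - sdf)^2 = -2 * penetration * grad(sdf), with grad(sdf) = normal
    return [-2.0 * penetration * ni for ni in normal]


def guided_sample(rng, steps=300, step_size=1e-2, guidance=50.0):
    """Langevin sampling from p(x) * exp(-guidance * c(x)): step along score minus cost gradient."""
    x = [rng.gauss(0.0, 1.0) for _ in range(2)]
    for _ in range(steps):
        score = prior_score(x)
        grad_c = collision_cost_grad(x)
        x = [
            xi + step_size * (si - guidance * gi) + math.sqrt(2.0 * step_size) * rng.gauss(0.0, 1.0)
            for xi, si, gi in zip(x, score, grad_c)
        ]
    return x
```

Setting `guidance=0.0` recovers plain sampling from the prior. Comparing the fraction of samples with `sdf(x) > 0` under both settings shows how guidance shifts probability mass out of the obstacle.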

Key Novelties

Our key contributions beyond existing methods are:

- We formulate arm-aware grasp generation as a joint optimization over the grasp pose and the arm configuration. We then derive its connection to guided sampling from a pretrained arm-agnostic grasp diffusion model with added arm constraints.

- We derive analytical forms and gradients for three commonly used arm-related constraints (collision avoidance, hand reachability, and joint proximity). These supply the guidance gradients and handle the complex mapping between joint-space constraints and Cartesian-space denoising.

- We design comprehensive benchmark scenarios for simulation and real-world evaluation, featuring high obstacle coverage and grasps near the arm's limits. These experiments verify that our method generates successful, constraint-satisfying grasps with a significantly higher probability than the commonly used rejection sampling strategy. The approach applies to various robotic arms (e.g., UR5 and Franka) and environments while using a single hand-centric grasp generation model.
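The first contribution links joint optimization to guided sampling. One way to see that link is sketched below, in assumed notation that is not necessarily the paper's:

- $g$ is the wrist pose.
- $q$ is the arm joint configuration.
- $C(g,q) \ge 0$ is a weighted sum of the arm constraint costs.
- $\lambda > 0$ is a guidance weight.
- $p_\theta$ is the pretrained arm-agnostic model.

Because $\lambda > 0$, optimizing jointly over pose and arm configuration is the same as optimizing the pose against the best-case arm cost:

$$
\max_{g,\,q}\ \big[\log p_\theta(g) - \lambda\, C(g,q)\big]
= \max_{g}\ \big[\log p_\theta(g) - \lambda\, \bar C(g)\big],
\qquad \bar C(g) = \min_{q}\, C(g,q).
$$

Reading the bracketed objective as an unnormalized log-density gives a tilted target distribution and its score:

$$
\tilde p(g) \propto p_\theta(g)\, e^{-\lambda \bar C(g)},
\qquad
\nabla_g \log \tilde p(g) = \nabla_g \log p_\theta(g) - \lambda\, \nabla_g \bar C(g).
$$

Suppose the minimizer $q^*(g)$ is unique and varies smoothly with $g$. The envelope theorem then gives $\nabla_g \bar C(g) = \partial_g C(g,q)\big|_{q=q^*(g)}$. In a diffusion sampler, the first term would be supplied by the learned noise-conditioned score, so each denoising step adds $-\lambda$ times the constraint gradient.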
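The second contribution mentions mapping joint-space constraints to Cartesian-space denoising. The sketch below shows one standard chain-rule view on a hypothetical planar 2-link arm:

- Near a non-singular configuration, $q = \mathrm{IK}(x)$ is locally smooth with $\partial q / \partial x = J^{-1}$.
- Therefore a joint-space gradient maps to the wrist position as $\nabla_x c = J^{-\top} \nabla_q c$.

The joint-limit penalty, the limits, the link lengths, and all function names are illustrative assumptions. For a redundant arm such as the 7-DoF Franka, the Jacobian is not square. This direct inverse would then need replacing (e.g., with a pseudo-inverse), and that choice is also an assumption here, not necessarily the paper's.

```python
import math

# Hypothetical planar 2-link arm (a toy; real targets such as UR5/Franka have 6-7 joints).
LINK1, LINK2 = 1.0, 0.8
Q_MIN = (-math.pi / 2, 0.2)
Q_MAX = (math.pi / 2, 2.6)
BAND = 0.3  # start penalizing within this distance of a limit (assumed)


def fk(q):
    """Forward kinematics: joint angles -> end-effector (wrist) position."""
    q1, q12 = q[0], q[0] + q[1]
    return (LINK1 * math.cos(q1) + LINK2 * math.cos(q12),
            LINK1 * math.sin(q1) + LINK2 * math.sin(q12))


def jacobian(q):
    """2x2 Jacobian d(fk)/dq as row-major nested lists."""
    q1, q12 = q[0], q[0] + q[1]
    s1, c1, s12, c12 = math.sin(q1), math.cos(q1), math.sin(q12), math.cos(q12)
    return [[-LINK1 * s1 - LINK2 * s12, -LINK2 * s12],
            [LINK1 * c1 + LINK2 * c12, LINK2 * c12]]


def joint_proximity_cost(q):
    """Squared hinge penalty, zero unless a joint is within BAND of a limit."""
    return sum(max(0.0, lo + BAND - qi) ** 2 + max(0.0, qi - (hi - BAND)) ** 2
               for qi, lo, hi in zip(q, Q_MIN, Q_MAX))


def joint_proximity_grad(q):
    """Analytic gradient of joint_proximity_cost with respect to q."""
    return [-2.0 * max(0.0, lo + BAND - qi) + 2.0 * max(0.0, qi - (hi - BAND))
            for qi, lo, hi in zip(q, Q_MIN, Q_MAX)]


def joint_to_cartesian_grad(q, grad_q):
    """Map a joint-space gradient to the wrist position: grad_x = J^{-T} grad_q."""
    (a, b), (c, d) = jacobian(q)
    det = a * d - b * c  # equals LINK1 * LINK2 * sin(q2); zero at singularities
    if abs(det) < 1e-9:
        raise ValueError("arm is at a singular configuration")
    # J^T = [[a, c], [b, d]]; solve J^T g = grad_q with the closed-form 2x2 inverse.
    g0, g1 = grad_q
    return [(d * g0 - c * g1) / det, (-b * g0 + a * g1) / det]
```

Collision and reachability costs defined on the arm's joints could be pushed to Cartesian space through the same `joint_to_cartesian_grad` step.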
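For reference, the rejection-sampling baseline named in the last contribution can be sketched generically. It draws unconstrained grasps and keeps only those that pass a feasibility check. The function name and signature are hypothetical, and the feasibility test stands in for whatever arm and environment checks a benchmark applies.

```python
import random
from typing import Callable, List, Tuple, TypeVar

G = TypeVar("G")  # a grasp sample in any representation


def rejection_sample(
    sample_prior: Callable[[], G],
    is_feasible: Callable[[G], bool],
    n_wanted: int,
    max_draws: int,
) -> Tuple[List[G], int]:
    """Draw unconstrained grasps and keep only feasible ones.

    Returns the accepted grasps and the number of draws used. The expected number
    of draws per accepted grasp is 1 / acceptance_rate, so cost grows quickly when
    obstacles cover much of the workspace or grasps lie near arm limits.
    """
    accepted: List[G] = []
    draws = 0
    while len(accepted) < n_wanted and draws < max_draws:
        grasp = sample_prior()
        draws += 1
        if is_feasible(grasp):
            accepted.append(grasp)
    return accepted, draws


if __name__ == "__main__":
    rng = random.Random(0)
    # Toy usage: uniform "grasps" in [0, 1), feasible only below 0.05 (about 5% acceptance).
    grasps, used = rejection_sample(rng.random, lambda g: g < 0.05, n_wanted=10, max_draws=10_000)
    print(len(grasps), used)
```

Guided sampling instead moves each sample toward the feasible set during denoising. It therefore does not pay this 1 / acceptance-rate cost per accepted grasp.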